Automatic Local Failover, Automated Recovery and Failback with 3-Node MySQL Clusters

There are a number of MySQL HA solutions around. But there are surprisingly few MySQL DR solutions. And there is only one solution that does MySQL HA right! And also does MySQL DR right, but more about that in the next blog article.

You may wonder how I qualify to talk about MySQL and especially MySQL HA solutions. I have been actively involved in the MySQL marketplace since 2001 when we first built Emic m/cluster (later Continuent m/cluster), which effectively was the predecessor of Galera (Seppo, Teemu and Alexey used to work for me).

I chose a different path for Continuent, with solutions built and based on asynchronous replication for a reason. This after we had tried another synchronous replication solution first, Continuent uni/cluster.

While synchronous replication is great for certain things, on other levels its promise is too good to be true. It’s not true, especially when building geo-distributed data services. Physics, more accurately the speed of light (translating into replication latency), comes in the way with synchronous solutions...

Even after these 20 years MySQL High Availability and Disaster Recovery is still a bit hit or miss. This is especially true with DIY types of deployments, but also true for a number of more complete MySQL HA solutions available.

Some people think that just taking a frequent backup is enough. Others focus more on performance. But then again, the scalability and performance of your MySQL database does not matter if it is not available.

So, everything starts with [high] availability and continuous operations 24/7/365: During a failure, or a disaster, but also during the maintenance. Yes, Zero Downtime Maintenance also needs to be achieved, but more about that in an upcoming blog!

There are quite a few MySQL HA solutions around. More than you might expect. The various solutions providing MySQL high-availability include (in alphabetical order):

- AWS Aurora

- Continuent Tungsten Cluster

- DIY (such as ProxySQL with Orchestrator)

- Galera Cluster

- Google Cloud SQL

- MariaDB Cluster

- MS Azure SQL

- MySQL InnoDB Cluster

- Percona XtraDB Cluster

The goal of this blog article is to showcase the simplicity of MySQL HA when “Done Right”.

Failover should be automatic and fast. “Done Right” also means we can easily and effortlessly failback to our primary database when it is once again available.

Regardless of the current solution that you may have, you may want to watch the MySQL HA Done Right! video. Or just take a look at the steps below, and compare how you are doing these similar steps with your own current MySQL HA deployment during the failure and recovery.

If any of this peaks your interest, we currently have a Competitive Comparison program that gives you access to Tungsten Cluster software free of charge during the first six months of the agreed subscription period.

We also have a special Startup Pricing program to allow access to better Enterprise-level MySQL HA solutions at a lower Startup-level cost.

You may also be interested in other blogs/videos in this series:

- MySQL DR Done Right (Part I) with Active/Passive MySQL Cluster (Blog/Video)

- MySQL DR Done Right (Part II) with Active/Active MySQL Cluster (Blog/Video - due out soon!)

- MySQL Zero Downtime Maintenance Done Right! (Blog/Video - due out soon!)

MySQL HA Deployment With Single 3-Node Cluster

This MySQL deployment assumes that you have a local highly available MySQL data service (a 3-node Tungsten Cluster). This setup also offers zero downtime maintenance, but more about that in a future blog article to follow.

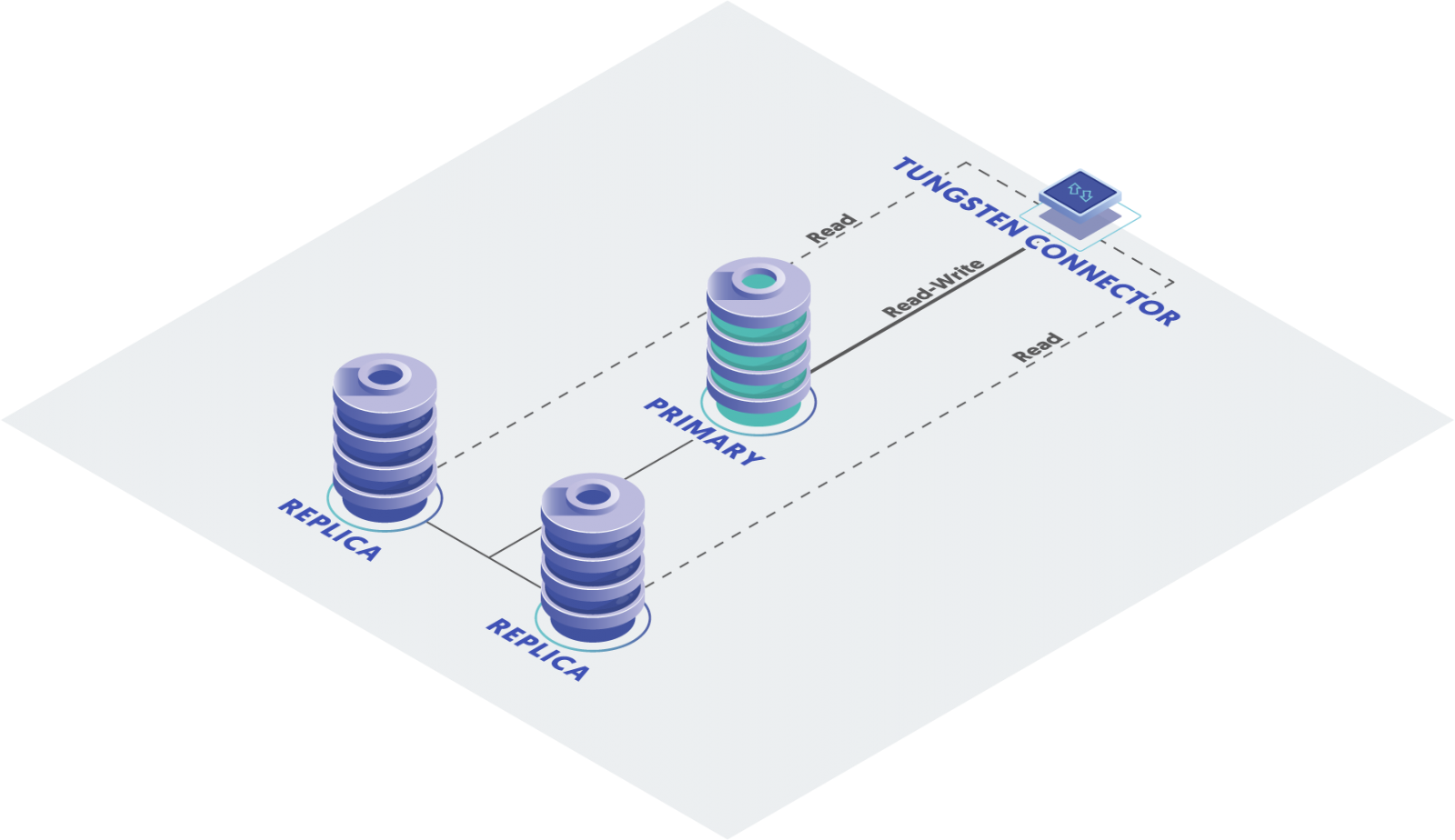

This Tungsten data service is connected to the application with an intelligent MySQL proxy. This intelligent MySQL proxy, Tungsten Proxy (aka Tungsten Connector) acts as an intermediate to intelligently route the traffic. From the application point of view, this Tungsten cluster appears as one complete MySQL data service, with read/write routing and load balancing.

While the intelligent MySQL proxy acts like a smart 'traffic cop', it would not be as powerful without a control center managing the whole operation. Someone needs to feed information for the traffic cop where to route the traffic, right? This is where the Tungsten Manager comes into play. The manager service is distributed between all MySQL nodes with one acting as the coordinator between the other manager instances. Managers know the role and state (primary, replica, relay, and shunned/failed) of each member in the complete MySQL data service. This allows Tungsten Managers to effectively orchestrate the operation of the data service together with the Tungsten Proxy.

(Along with geo-distributed Active/Passive cluster and Active/Active MySQL cluster)

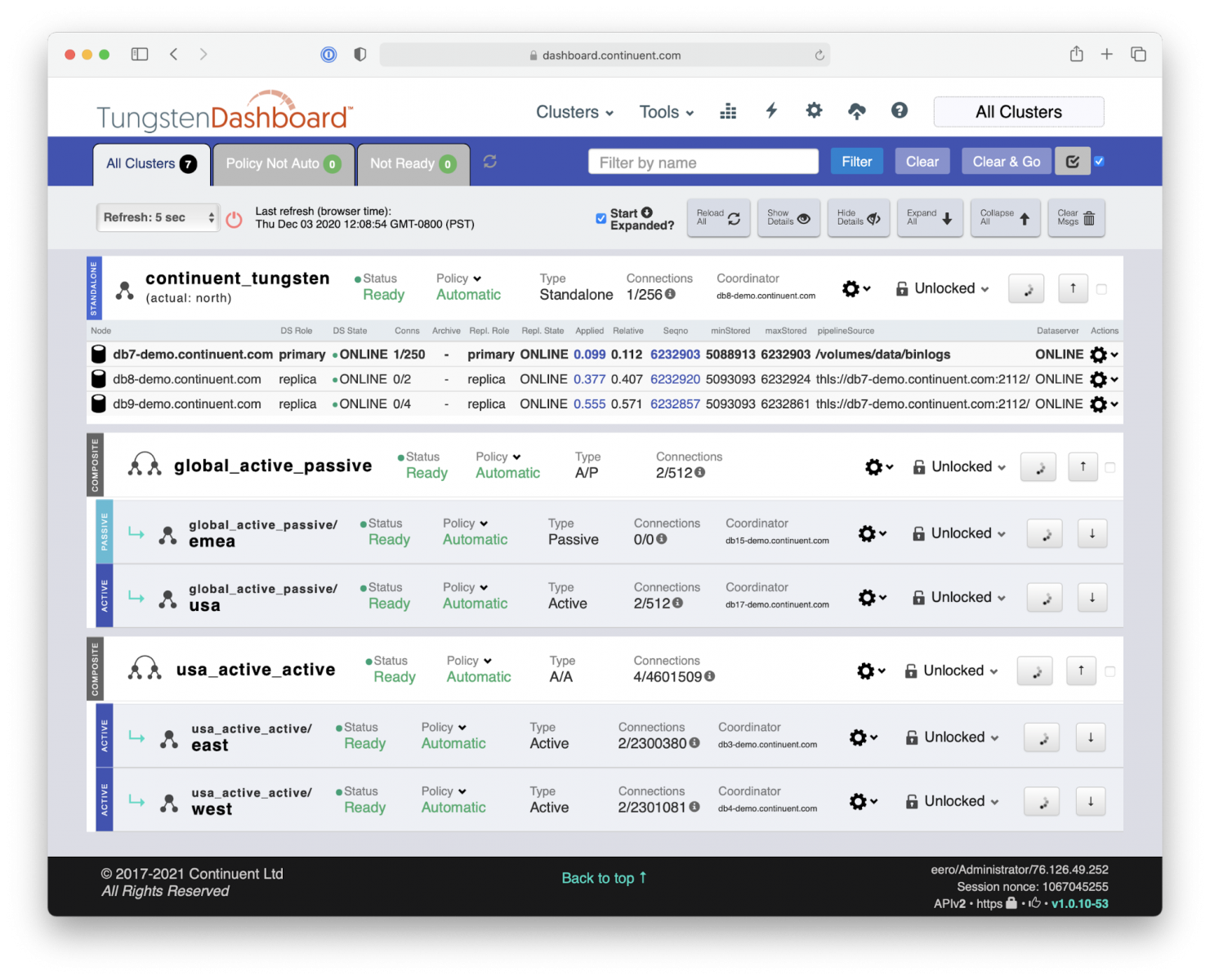

The 3-node Tungsten cluster deployment visible through Tungsten Dashboard. As you can see it is accompanied with two other data services: EMEA/USA based active/passive MySQL cluster ‘global_active_passive’ and USA-only based active/active MySQL cluster ‘us_active_active’.

Tungsten Dashboard makes it very easy and effective to manage and to monitor a large number of Tungsten MySQL clusters.

Automatic and Fast Local MySQL Failover for High Availability

Let’s take a look at what happens when the primary MySQL database fails. With the Tungsten Cluster solution, it is a remarkably uneventful occasion.

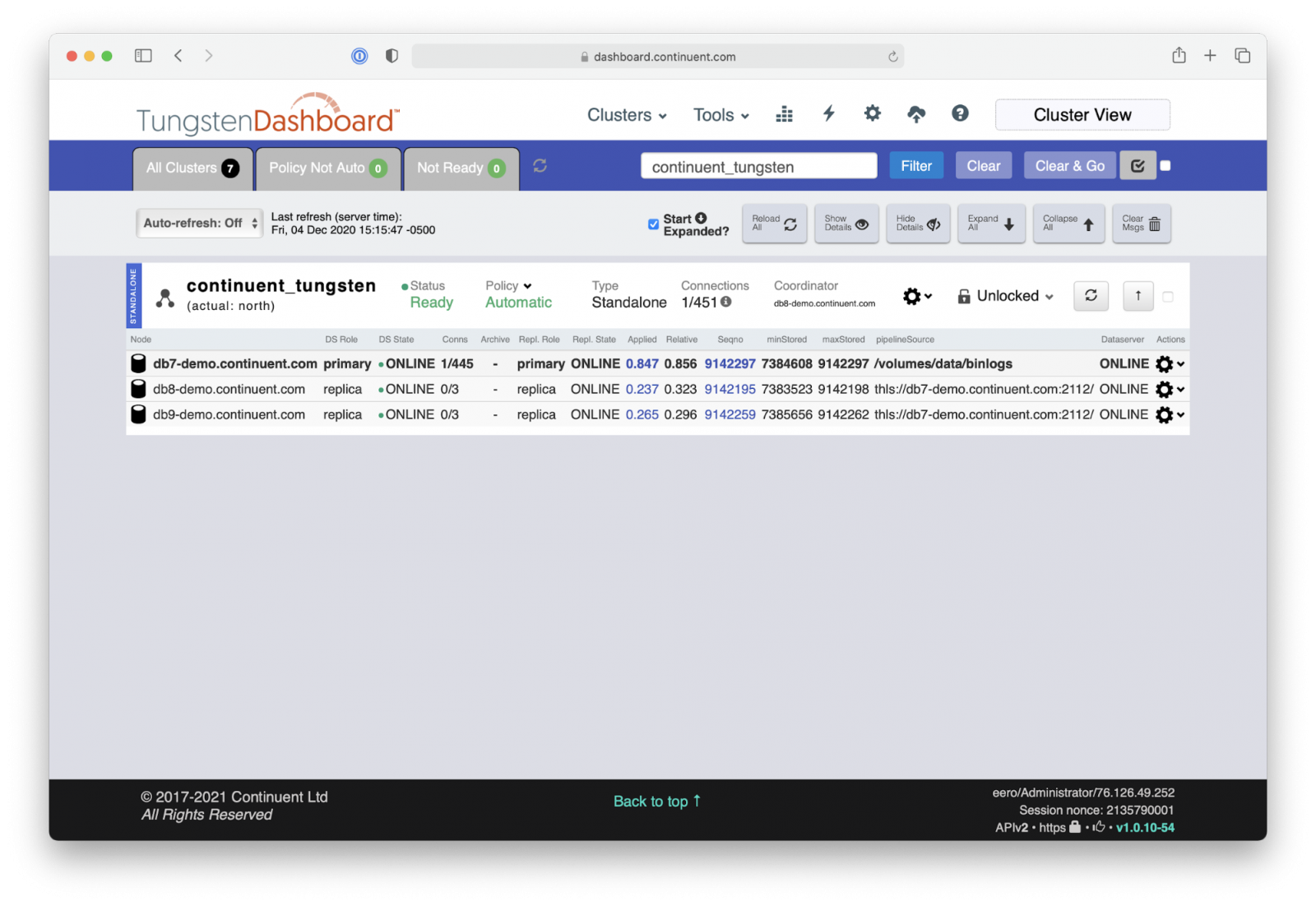

In the current deployment, the DB7 is the Primary MySQL database. It has two local replicas, DB8 and DB9.

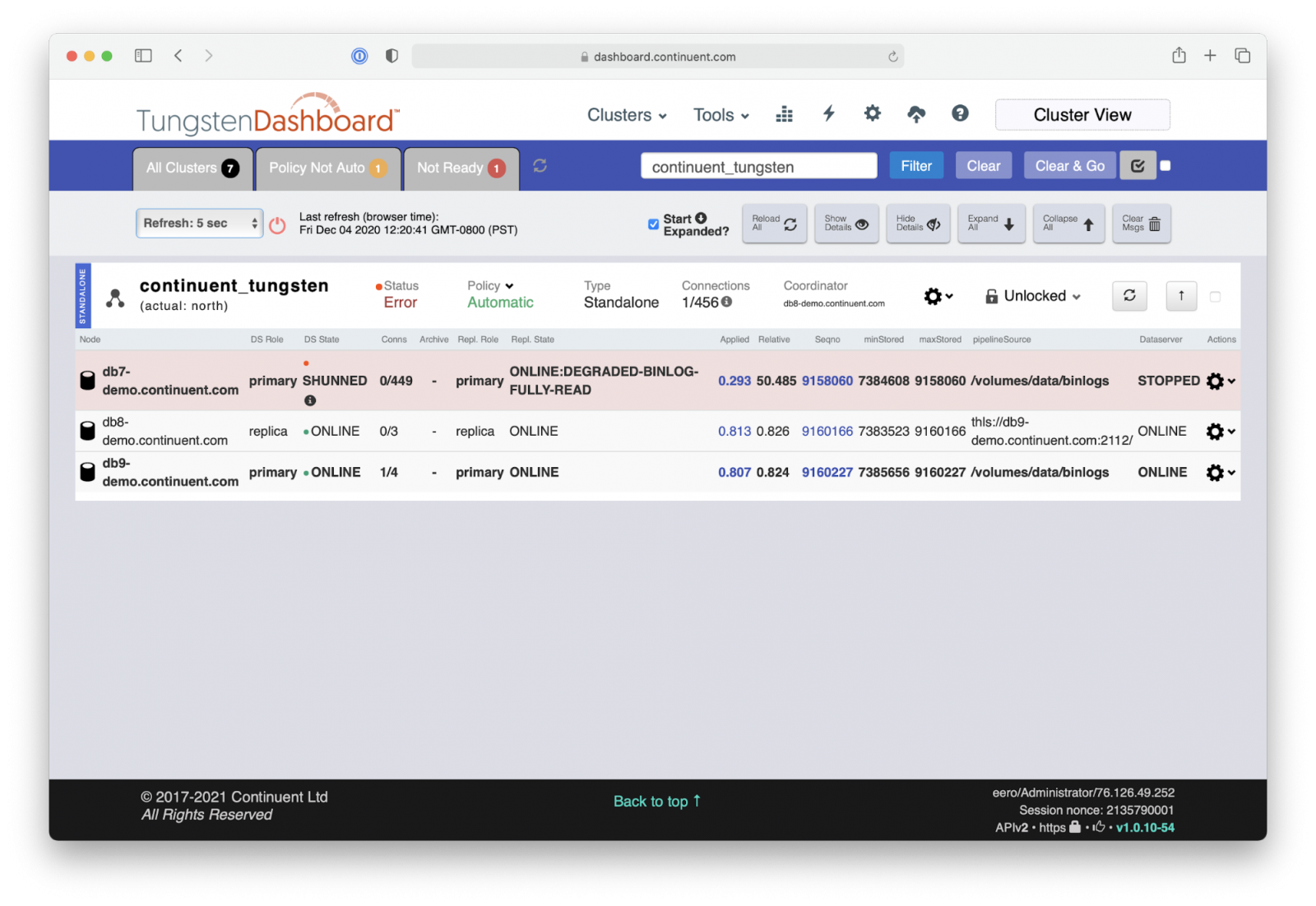

When the unlucky DB7 fails, Tungsten Manager and Tungsten Proxy kick into action.

- Tungsten Manager gets near-immediate notification through group-communication that DB7 is out of commission.

- Manager informs Tungsten Proxy to hold the traffic from the applications.

- After that Manager automatically picks the most up-to-date Replica DB9 and promotes it into the role of new Primary.

- After applying the remaining transactions to catch up with the old Primary, the new Primary database DB9 is ready to serve incoming traffic. This is typically a sub-second event.

- Manager connects the remaining Replica DB8 to the new Primary DB9 database.

- Manager notifies Proxy to open up the traffic from the applications. Voilà, we are back in action!

From the application’s point of view, there never was a failure, just a short, typically sub-second, delay with the database servicing the application. Also, no connections were lost. Very smooth operation. In seconds. No operational impact. No human intervention needed.

Since this is a simple single 3-node local cluster, no further action would be needed by the Tungsten Manager and Tungsten Proxy. Naturally, and by design, DBA intervention is needed to check out what happened to the failed database and to bring it back to the cluster.

Failure Alerts

While there was no data service interruption for the applications, we naturally do want to notify DBAs and/or Site Reliability Engineers without delay about the failure for them to take the necessary steps to fully recover the data service back into normal operation.

Tungsten Cluster can be integrated with various monitoring and alert systems of your choice, such as Nagios, Prometheus, and New Relic, and for example using PagerDuty for alerts.

Database Recovery and Failback

After we have ensured the continuous operations with the automatic failover, now is the time to clean up, recover the failed DB7 database and promote it back as a local Primary database.

Once again, all of this is automated, but naturally only after we checked and fixed what was the underlying issue causing the failure on the DB7 in the first place.

The steps to return to the original state are as follows:

- Recover DB7 and introduce it back to the cluster as a Replica.

- Promote DB7 as the Primary of the cluster

This can be achieved with two simple commands: i) ‘Recover’ applied to DB7 and ii) ‘Switch’ applied to DB7 to finish the process.

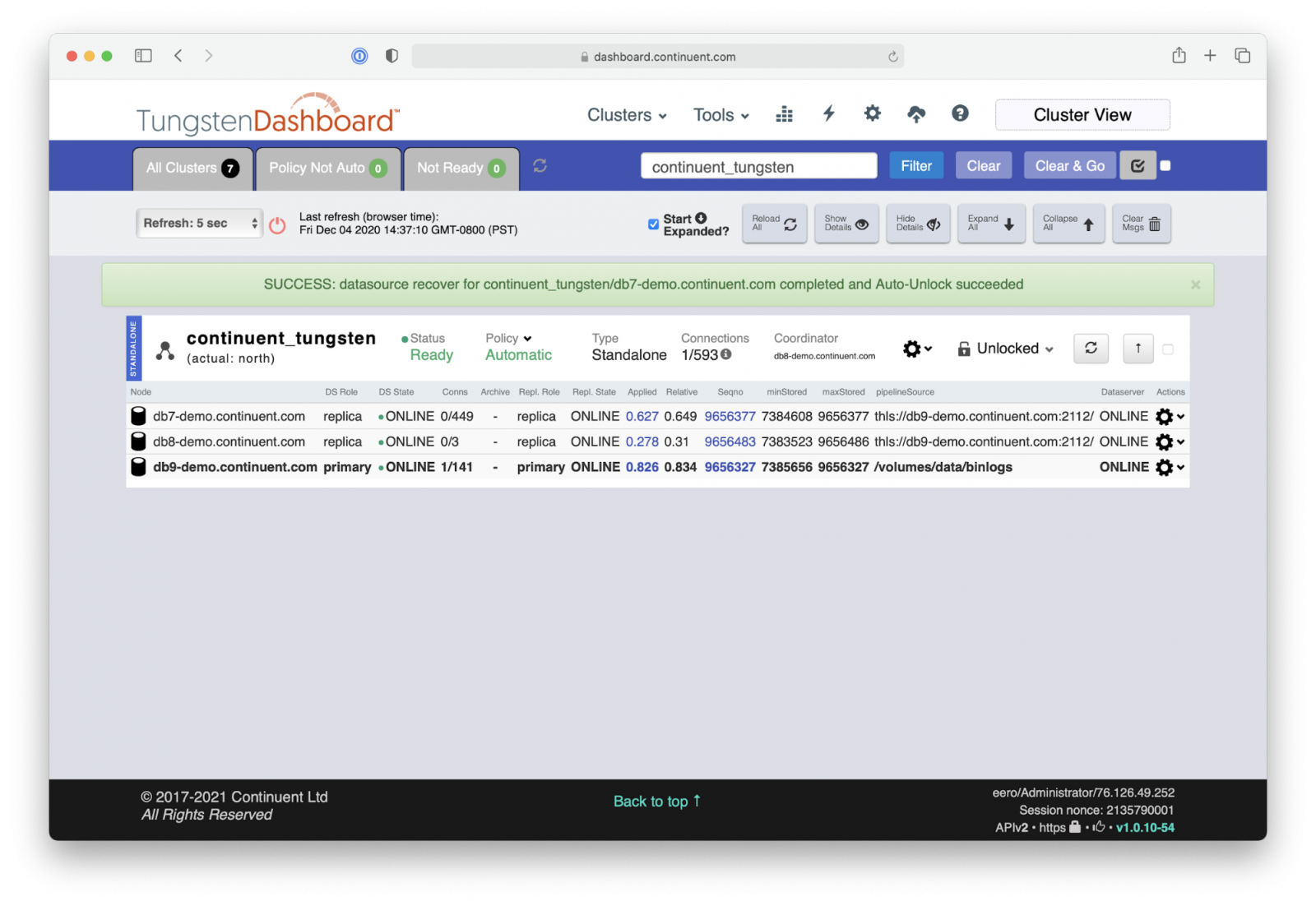

Database Recovery: Bring the DB7 back online with ‘Recover’ command.

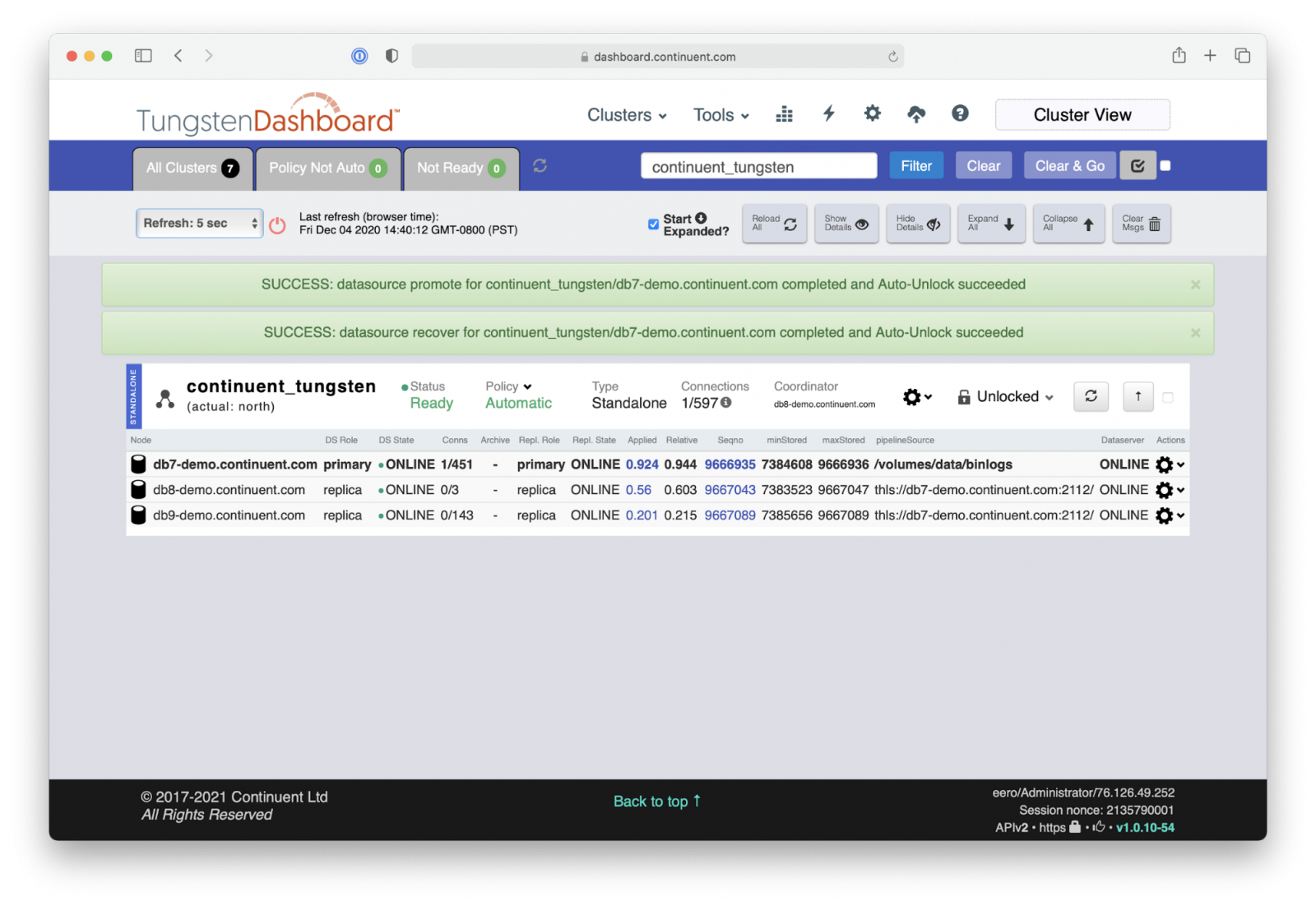

Primary Database Promotion: Promote the DB7 back as the Primary with a local ‘Switch’ command

This blog article showcased the simplicity of the MySQL HA when Done Right.

It is actually quite amazing how the orchestration of the MySQL clusters can be made so simple; all the while the applications were kept online and servicing the clients, only with an appearance of short service delays from time-to-time while Tungsten Proxy and Tungsten Manager were doing the heavy lifting of the complex change operations with seemingly effortless ease.

I hope you enjoyed it!

And if you would like to see this as a live demo, please take a look at the MySQL HA Done Right video.

Also, beside this blog you may be interested in other blogs/videos in this series:

- MySQL HA/DR Done Right (Part I) with Active/Passive MySQL Cluster (Blog/Video)

- MySQL HA/DR Done Right (Part II) with Active/Active MySQL Cluster (Blog/Video - due out soon!)

- MySQL Zero Downtime Maintenance Done Right! (Blog/Video - due out soon!)

Smooth sailing,

Eero Teerikorpi

Founder and CEO, Continuent

Comments

Add new comment