Introduction

A database proxy's most important task is to select the cluster node that will execute a given client application request.

A simple proxy will always select the primary, while smarter ones can route read requests to replicas or send write statements to other primaries in active-active clusters.

The Continuent proxy, Tungsten Connector, takes this one step further, allowing fine-grained control over which node, or site, is eligible for request execution. We call this "affinity".

Affinity: explained

Affinity really does what it says: "prefer this node or cluster site for request execution; if it is not available, fall back to another healthy node".

The notion of “node or cluster site” is determined by the cluster topology:

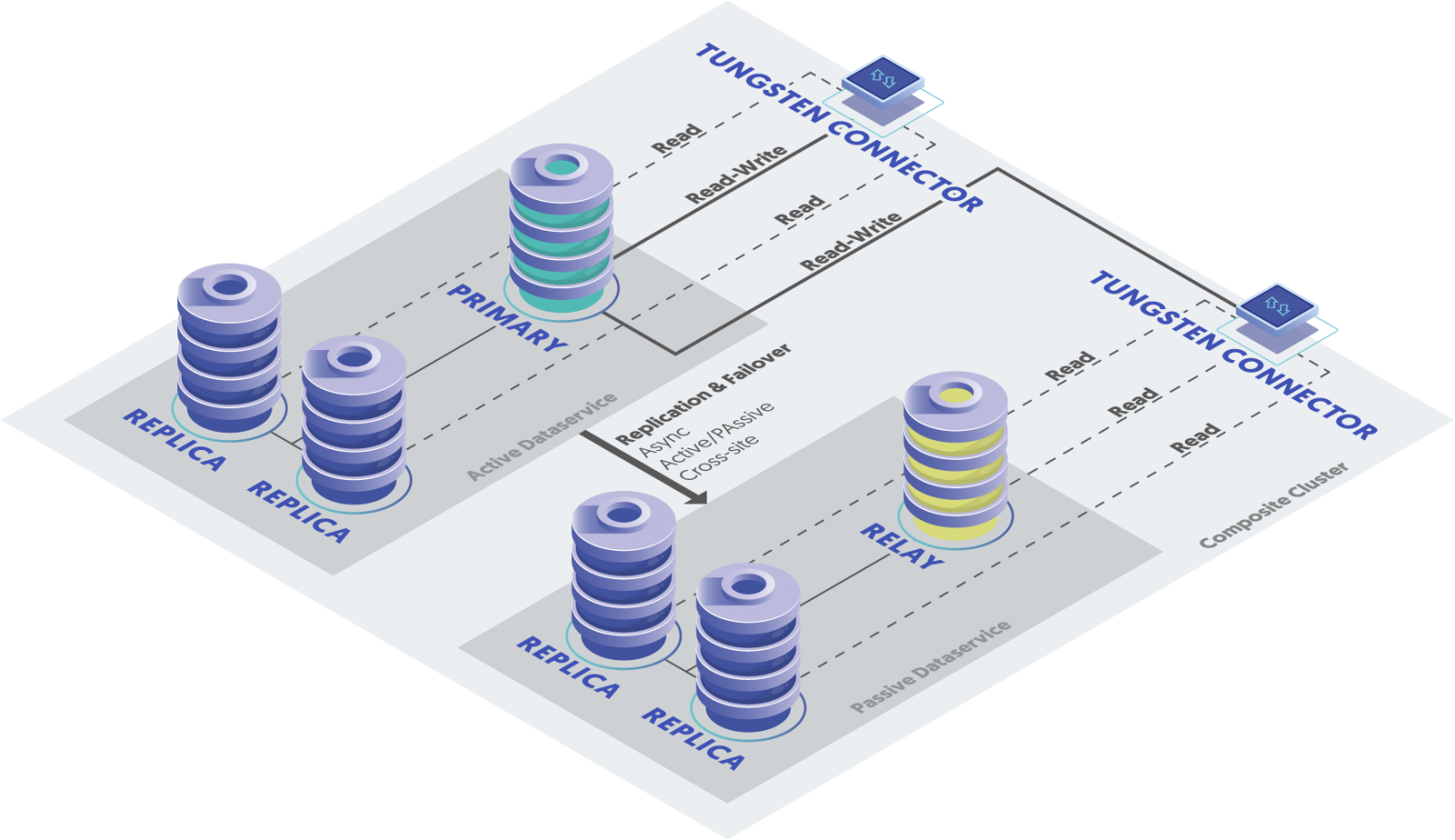

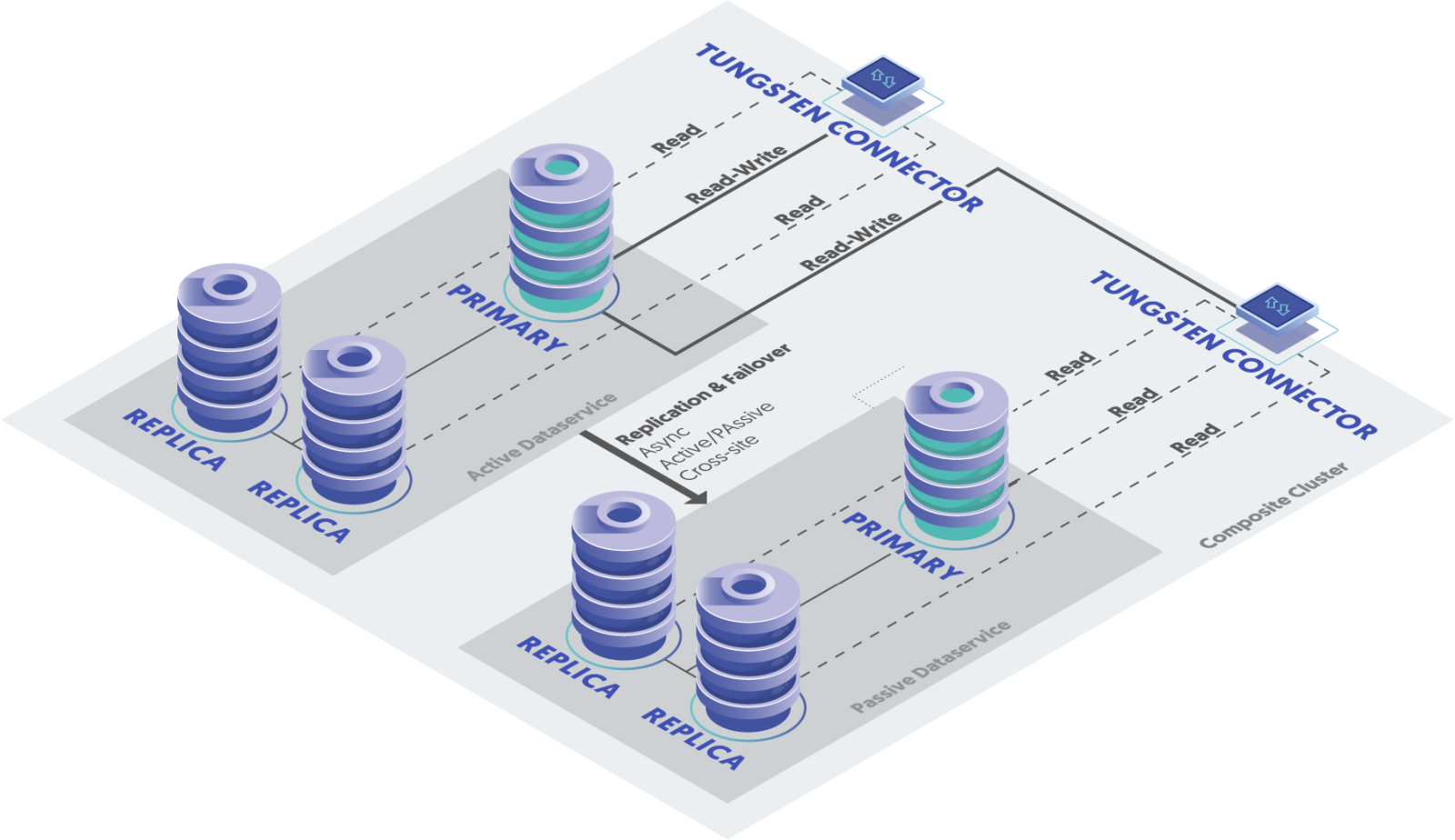

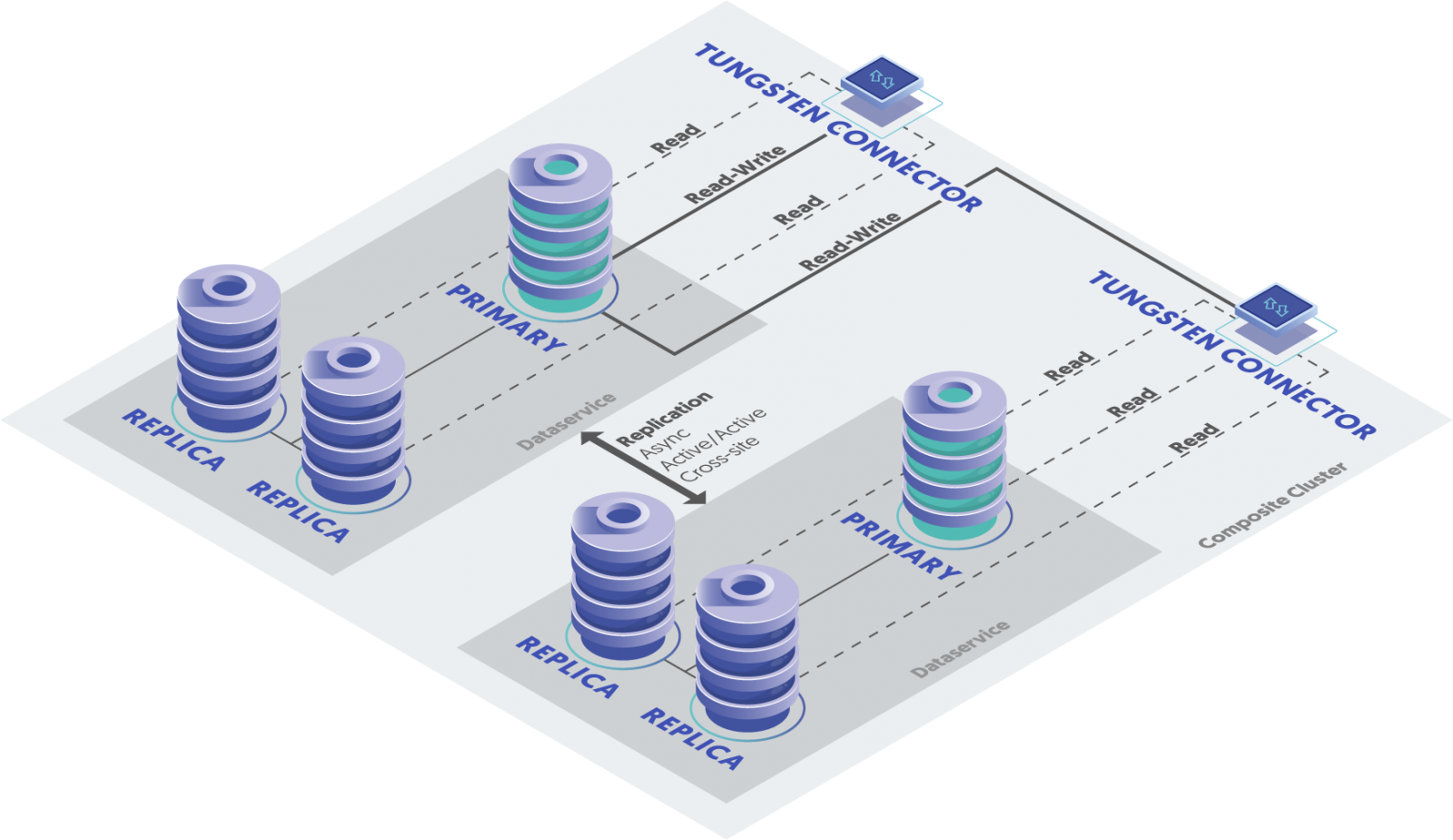

In a multi-site cluster (geo-distributed, active-active or active-passive), affinity is set per data service name. Applications connect to the global composite data service “World” and specify an affinity of “Europe”, “Asia” or “US-East”.

For single-site clusters, affinity points to a data source name, which is an individual hostname. For example, applications connect to data service “local” with affinity “host2”.

In practice, we offer three options to set affinity:

- globally: the tpm flag connector-affinity=preferredHost(s) will set the read affinity for all incoming connector sessions (bridge and proxy modes)
- per user: in user.map, specify "user pass service preferredHost(s)" to apply the setting to a given user (proxy mode only)
- per connection: under proxy mode, appending "@affinity=preferredHost(s)" will force the affinity for this connection only

Now that we know what affinity refers to and how to set it, let's see how it can help in various use cases:

Single data service: Got muscles? Use them!

Production environments that are set up with clustering and replication don't always have, or need, beefy servers all around. One will obviously want to put more power on a primary node to handle heavy write load, but what about replicas?

In a (boring?) round-robin world, you'd just align secondary nodes to the same hardware specs since they will get equivalent load on average.

Should you decide to get, or have available, serious hardware to handle read operations, Tungsten affinity will allow you to “prefer” a given computer for serving these requests. Typically, you would provide affinity=myBeefyServer as a configuration parameter.

You can also give it an ordered list of preferences for datasource selection: separate the hosts with commas and no spaces, like affinity=superPowerHost,mediumPower,lowPower.

Should you want to disallow one or more hosts in your cluster, for example to keep them free from application traffic, you can exclude them by simply adding a dash (-) in front of their hostname: affinity=host1,host2,-hostThatShouldNotGetReads.
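The selection rule described above (ordered preferences, dash-prefixed exclusions, fallback to another healthy node) can be sketched in a few lines of Python. This is only an illustration of the semantics, not the Tungsten Connector's actual implementation: the function names `parse_affinity` and `select_host` are hypothetical, and the fallback order for hosts that are not listed is an assumption.

```python
def parse_affinity(affinity):
    """Split an affinity string into (ordered preferences, excluded names)."""
    preferred, excluded = [], set()
    for name in affinity.split(","):
        name = name.strip()
        if not name:
            continue
        if name.startswith("-"):
            # A leading dash marks a host that must never be selected.
            excluded.add(name[1:])
        else:
            preferred.append(name)
    return preferred, excluded


def select_host(affinity, healthy_hosts):
    """Return the first healthy preferred host, else another healthy, non-excluded host."""
    preferred, excluded = parse_affinity(affinity)
    for host in preferred:
        if host in healthy_hosts:
            return host
    # Assumption: when no preferred host is healthy, fall back to the
    # remaining healthy hosts in the order they were given.
    for host in healthy_hosts:
        if host not in excluded:
            return host
    return None  # nothing eligible


# superPowerHost is down, so the next preference is chosen.
print(select_host("superPowerHost,mediumPower,lowPower", ["mediumPower", "lowPower"]))
```

With affinity=host1,host2,-host3 and only host3 healthy, this sketch returns `None`: an excluded host is never selected, even as a last resort.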

Multi-site: Eat local!

Affinity was primarily designed for multi-site clusters, to allow the selection of a geographic site for read operations.

In an active/passive multi-site cluster, writes must go to the active primary, period. However, with the Tungsten Connector, applications located at the relay site can benefit from closeness to the read replicas and get high speed reads by specifying affinity=localsite.

Just like in single-site clusters, it is also possible to specify a list of preferred sites: in a cluster spread all over the world, you’ll want applications based in Europe to read from the Europe dataservice. If the Europe site fails, you’ll prefer jumping over to the USA cluster rather than to Asia. Such a use case would translate into affinity=europe,usa,asia.

The same logic applies with “negative affinity”, where you can forbid access to a particular data service by adding a dash in front of its name: affinity=europe,usa,-asia.

Composite Active-Active: Affinity on Writes (New!)

Active-Active environments can be pretty challenging, especially when the applications were not designed with this architecture in mind. Without clear partitioning or a strong primary key policy, conflicts can, and will, happen.

We at Continuent have seen cases where data diverges because applications were not designed for active-active, and we wanted to provide an easy solution to reduce, if not eliminate, the risk.

As is often the case, simpler is better: write affinity sends all database updates to a single site, catching conflicts upfront, while reads remain local for best performance. This translates to affinity=siteForWrites:localSite (where siteForWrites can be the local site!).
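To make the siteForWrites:localSite notation concrete, here is a minimal Python sketch of how such a string could be split into a write preference and a read preference. It is an illustration only, not the connector's code: the helper names are hypothetical, and treating a value without a colon as a read-only preference is an assumption.

```python
def split_write_affinity(affinity):
    """Split 'siteForWrites:localSite' into (write_site, read_site)."""
    if ":" in affinity:
        write_site, read_site = affinity.split(":", 1)
        return write_site, read_site
    # Assumption: without a colon, the value is a read affinity only,
    # and writes carry no site preference.
    return None, affinity


def preferred_site(affinity, is_write):
    """Return the site a request should prefer, depending on its type."""
    write_site, read_site = split_write_affinity(affinity)
    return write_site if is_write else read_site


# An application in Europe that sends all writes to the USA site:
print(preferred_site("usa:europe", is_write=True))   # usa
print(preferred_site("usa:europe", is_write=False))  # europe
```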

Instant Site-Level Failover: Best of Both Worlds

Affinity on writes, described above, has one more amazing application: you can actually convert any active/passive cluster into an active/active one, while still sending writes to only one site (which used to be the primary site). In case of failure of the primary site, Tungsten Connector will re-route all the traffic to the site that remains up, providing a true instant failover capability to your cluster. Reach out to us for more info!

Online Documentation:

https://docs.continuent.com/tungsten-clustering-6.1/connector-routing-affinity.html

https://docs.continuent.com/tungsten-clustering-6.1/connector-routing-write-affinity.html
